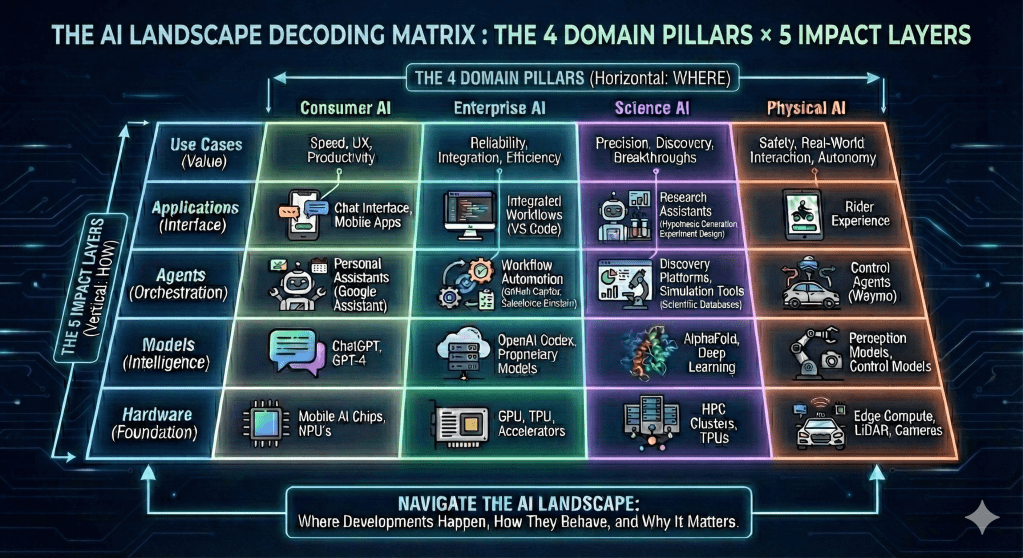

A Simple Mental Model — How I Break the AI World into 4 Pillars

Introduction

In my previous post, I discussed the need to shift from Tactical Thinking (chasing tools) to Structural Thinking (understanding the landscape) in order to make sense of AI. In this post, we will build the foundation of that structure.

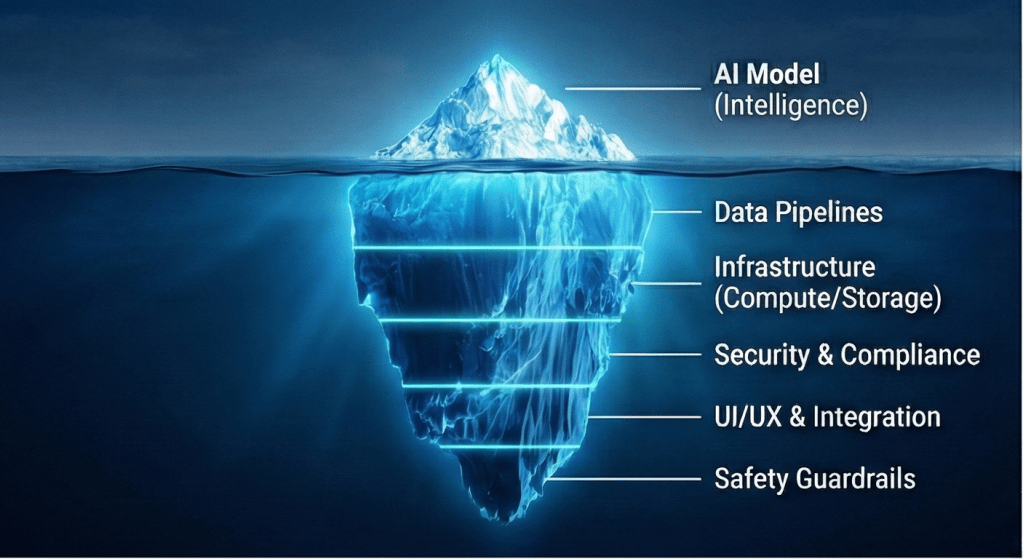

When we talk about “Applied AI,” it is easy to get fixated on the “AI” part: the models, the algorithms, the neural networks. But when we try to adopt AI in the real world, the model is often just one part of the equation.

Applied AI is not just about models; it is a system.

To make AI work, you need more than just intelligence. You need data pipelines, user interfaces, safety guardrails, integration logic, and hardware infrastructure. You need to consider the human who uses it and the environment where it operates. When you look at the full picture, you realize that “AI” is just one ingredient in a complex recipe. And just like in cooking, the same ingredient (AI) produces a completely different result depending on what else you mix it with and how you serve it.

The Core Concept: Why “AI” Is Not One Thing

The biggest mistake organizations and individuals make is treating AI as a monolithic wave—assuming that the same rules, timelines, and strategies apply everywhere. They ask generic questions like “When will AI replace jobs?” or “Is AI safe?”

These questions have no simple, straightforward answer, because adopting AI involves far more than the model itself.

The Analogy: The Engine vs. The Vehicle

Think of an AI model (like GPT-4 or Claude) as a high-performance engine. An engine is a sophisticated core component, yet it provides no transportation utility on its own. To function effectively, it requires a chassis, wheels, a steering system, and an operator. It must be integrated into a complete vehicle.

Imagine attempting to solve every transportation challenge with a single strategy: “Install a high-performance sports car engine.”

- On a racetrack (Consumer): This approach works perfectly; speed is the primary objective.

- Plowing a field (Enterprise/Industrial): A high-revving engine is ineffective; the requirement is torque, traction, and sustained power under load.

- Transporting cargo across an ocean (Logistics): Raw speed is irrelevant compared to fuel efficiency, durability, and massive scale.

- Exploring the surface of Mars (Frontier/Science): A standard combustion engine will fail instantly due to environmental constraints; the need is for rugged autonomy and specialized engineering.

This is exactly how Applied AI works. The “Engine” (the intelligence) might be similar across different use cases, but the “Vehicle” (the application) must be radically different depending on the terrain. Sometimes even the Engine itself has to be modified for a particular use case.

This principle applies directly when rolling out AI-driven applications: different applications require fundamentally different architectures, not just different features. Continuing with the vehicle analogy, here is how the 4 AI pillars map to different vehicle types:

- Consumer AI (The Sports Car): Optimized for high velocity, agility, and individual engagement. The priority is reducing user friction and maximizing experience.

- Enterprise AI (The Freight Locomotive): Engineered for massive scale, unwavering reliability, and strict governance. The priority is secure, consistent throughput on defined rails.

- Science AI (The Deep-Sea Submersible): Purpose-built for extreme precision in unexplored environments. The priority is navigating high-complexity domains to extract novel insights, not raw speed.

- Physical AI (The Industrial Rover): Designed for real-world interaction where the cost of failure is physical. The priority is safety, sensor integration, and navigating dynamic, unstructured environments.

If you try to apply “Sports Car” thinking to a “Cargo Train” problem, you will crash. This is why we need to break the AI landscape into 4 Pillars.

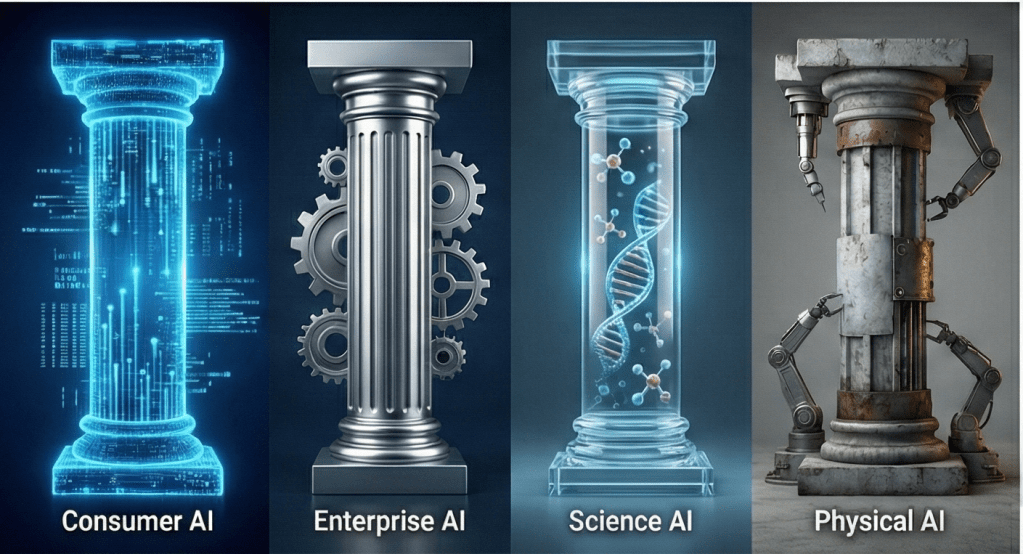

The 4 Pillars of Applied AI

Now that we have explored the vehicle analogy, it is clear why AI cannot be treated as a single entity when adopting and applying it. The architecture, stack, and strategy must vary based on fundamentally different challenges: speed vs. reliability, user delight vs. regulatory compliance, and digital outputs vs. physical safety. We can categorize these adoption patterns into four distinct pillars.

Pillar 1: Consumer AI

This is the AI that touches our daily lives. It is fast, personal, and often creative.

- The Goal: Enhance individual productivity, creativity, or entertainment.

- The Constraint: User Experience (UX) and Latency. If it takes 10 seconds to reply, users walk away. If it’s hard to use, they ignore it.

- The “Vehicle Analogy”: The Sports Car. It’s about speed, style, and the driver’s feeling.

- Real-World Examples:

- ChatGPT / Claude: Chatbots that help you write emails or plan trips.

- Midjourney: Tools that generate art from text.

- Siri / Alexa: Voice assistants that manage your home.

Pillar 2: Enterprise AI

This is the AI that powers businesses and organizations. It is serious, governed, and integrated.

- The Goal: Automate processes, analyze data, and augment knowledge work at scale.

- The Constraint: Accuracy, Security, and Integration. A chatbot that hallucinates a discount code is annoying; a financial AI that hallucinates a revenue number is a lawsuit. It must connect securely to internal data.

- The “Vehicle Analogy”: The Cargo Train. It carries a heavy load, runs on fixed rails (processes), and reliability is more important than 0-60 mph speed.

- Real-World Examples:

- Customer Support Bots: Systems that handle thousands of refund requests automatically.

- Code Copilots: Tools that help developers write secure code faster.

- Legal Document Analysis: AI that reviews contracts for risks.

Pillar 3: Science & STEM AI

This is the AI that pushes the boundaries of human knowledge. It is precise, computationally expensive, and transformational.

- The Goal: Accelerate discovery in biology, physics, chemistry, and math.

- The Constraint: Precision and Complexity. “Good enough” isn’t acceptable here. The AI must model the laws of physics or biology accurately.

- The “Vehicle Analogy”: The Deep-Sea Submersible or Space Rover. It goes where humans physically cannot, exploring the unknown depths of data.

- Real-World Examples:

- AlphaFold: AI that predicts protein structures, revolutionizing biology.

- Weather Forecasting Models: AI that can match or beat traditional physics-based models on many forecasting benchmarks, including some extreme weather events.

- Materials Science Discovery: AI that helps find new battery materials.

Pillar 4: Physical AI

This is the AI that leaves the screen and enters the real world. It is the hardest pillar because the real world is messy and unforgiving.

- The Goal: Interact with physical objects, navigate environments, and perform manual tasks.

- The Constraint: Safety and Physics. If a chatbot makes a mistake, you get bad text. If a robot makes a mistake, it breaks something or hurts someone.

- The “Vehicle Analogy”: The Industrial Robot or Autonomous Truck. It must be rugged, aware of its surroundings, and fail-safe.

- Real-World Examples:

- Waymo / Tesla FSD: Autonomous vehicles navigating traffic.

- Warehouse Robots: Amazon’s robots moving packages.

- Humanoid Robots: Emerging robots designed to fold laundry or work in factories.

Why This Distinction Matters

You might ask, “Why not just categorize AI by what it does—like Text AI vs. Image AI?”

Categorizing by modality (text, image, video) tells you what the tool is, but it doesn’t tell you how to manage it. A text model used to write a poem (Consumer) behaves completely differently from a text model used to summarize a medical record (Enterprise).

By categorizing by Pillar, you gain a clearer understanding of what to expect. You can immediately identify the constraints, timelines, and success metrics that apply to your specific AI project.

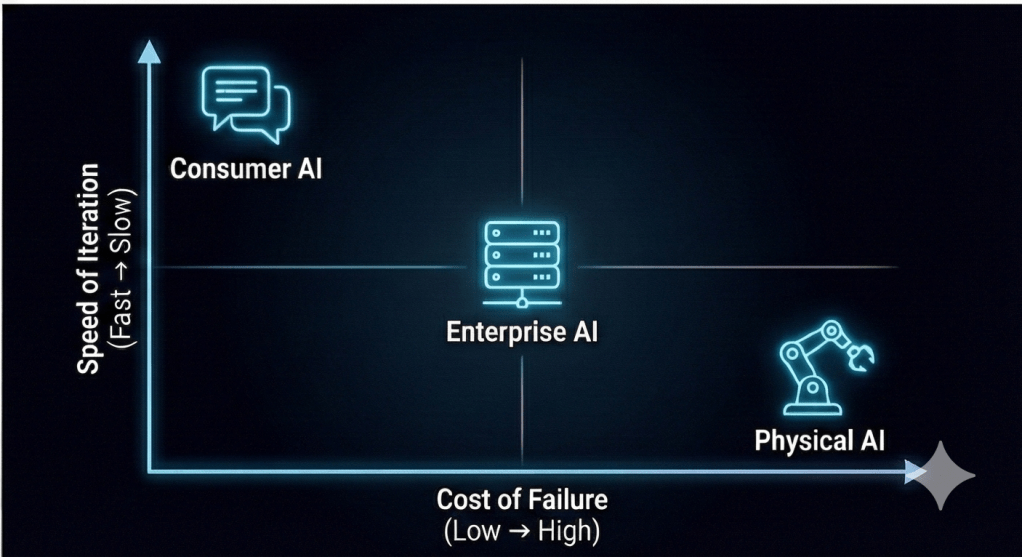

1. Different Speeds of Innovation

- Consumer AI moves at the speed of software. New apps launch weekly.

- Physical AI moves at the speed of hardware and safety regulation. It takes years to certify a robot or a self-driving car.

- Mistake to Avoid: Don’t get frustrated that your warehouse robots aren’t improving as fast as ChatGPT. They are in a different pillar with different friction.

2. Different Measures of Success

- Consumer AI is measured by engagement and delight.

- Enterprise AI is measured by ROI, accuracy, and cost savings.

- Science AI is measured by breakthroughs and new knowledge.

- Mistake to Avoid: Don’t judge a scientific model by its user interface, or an enterprise tool by how “fun” it is to chat with.

3. Different Risk Profiles

- If a Consumer image generator makes a weird picture, it’s a meme.

- If an Enterprise legal bot hallucinates a clause, it’s a liability.

- If a Physical robot fails, it’s a safety hazard.

When you know which pillar a project belongs to, you can immediately anticipate:

- What constraints will dominate (speed? safety? accuracy?)

- What stakeholders will be involved (users? regulators? scientists?)

- What timeline is realistic (weeks? months? years?)

- What failure modes to expect (bad UX? compliance issues? physical harm?)
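The anticipation step above boils down to a lookup: name the pillar, and its dominant constraints follow. Below is a minimal Python sketch of that idea, assuming a hypothetical `PILLARS` table filled in from the pillar descriptions in this post. `PillarProfile` and `anticipate` are illustrative names, not part of any library or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PillarProfile:
    """Hypothetical record of what a pillar implies for a project."""
    vehicle: str                           # the vehicle analogy used in this post
    dominant_constraints: tuple[str, ...]  # constraints that will dominate the project


# Hypothetical table, populated from the pillar descriptions in this post.
# It is an illustration of the mental model, not an exhaustive taxonomy.
PILLARS: dict[str, PillarProfile] = {
    "Consumer": PillarProfile("Sports Car", ("user experience", "latency")),
    "Enterprise": PillarProfile("Freight Locomotive", ("accuracy", "security", "integration")),
    "Science": PillarProfile("Deep-Sea Submersible", ("precision", "complexity")),
    "Physical": PillarProfile("Industrial Rover", ("safety", "physics")),
}


def anticipate(pillar: str) -> list[str]:
    """Turn a pillar name into a planning checklist of its dominant constraints."""
    if pillar not in PILLARS:
        raise ValueError(f"Unknown pillar: {pillar!r}; expected one of {sorted(PILLARS)}")
    profile = PILLARS[pillar]
    return [f"Plan for {c}" for c in profile.dominant_constraints]


# Usage: an internal knowledge assistant for employees sits in the Enterprise pillar.
print(anticipate("Enterprise"))
```

The table is deliberately tiny. You could extend each profile with the other dimensions listed above (stakeholders, timelines, failure modes), but the idea stays the same: the pillar sets the checklist before any model is chosen.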

Instead of discovering these answers the hard way—through trial and error—the pillar framework lets you predict them upfront. This is the “predictive power” of structural thinking: you’re not just reacting to problems; you’re anticipating them before they occur.

When you understand which pillar you are operating in, you stop applying the wrong rules to the game. You stop trying to drive a tractor like a Ferrari.

This clarity transforms how you approach any AI initiative. Rather than asking the vague question “How do we adopt AI?”, you can now ask the precise question: “Which pillar does this project belong to, and what does that tell us about how to execute it?”

For example, if your company wants to build an internal knowledge assistant for employees, you know immediately that you are in the Enterprise pillar. This means:

- You will need to prioritize data security and access controls from day one

- The AI must integrate with your existing identity management and document systems

- Hallucinations are not just annoying—they could spread misinformation across your organization

- Your success metric is not “how engaging is the chat” but “how much time did we save” and “how accurate are the answers”

- You should expect a 3-6 month rollout, not a weekend prototype

Contrast this with building a creative writing assistant for novelists, which sits in the Consumer pillar. There:

- Speed and personality matter more than perfect accuracy

- Users expect a delightful, intuitive interface

- Your success metric is user retention and satisfaction

- You can iterate weekly based on user feedback

The same underlying language model could power both applications, but the vehicles you build around that engine are completely different. The pillar framework gives you this insight before you write a single line of code or sign a single vendor contract.

Summary

In this post, we established the first fundamental layer of structural thinking: the 4 Pillars of Applied AI—Consumer, Enterprise, Science, and Physical. We explored how the same underlying AI “engine” produces radically different outcomes depending on the “vehicle” it powers. Most importantly, we learned that knowing which pillar your project belongs to allows you to predict its constraints, stakeholders, timelines, and failure modes before you begin.

But identifying the right pillar is only half the story. Even within a single pillar, AI projects succeed or fail based on how well the underlying layers—from hardware to models to applications—work together. In the next post, “The Impact Layers — How AI Progress Actually Happens,” we will dive beneath the surface to explore the 5-layer stack that determines whether AI potential translates into real-world value, and why even the smartest model can fail if a single layer is weak.

References

- Previous Blog in Series: Blog 1: Why AI Feels Overwhelming — And Why That’s the Wrong Way to Look at It

- Next in Series: Blog 3: The Impact Layers — How AI Progress Actually Happens (Coming Soon)

Author’s Note: AI-assisted writing tools were used to support the creation of this post. All concepts, perspectives, and the underlying thought process originate from me; the AI served only as a drafting and refinement aid.